This IBM Think piece crystallized something I’ve been pondering: what technical skills will the next generation need when LLMs make precise coding knowledge increasingly redundant? (The question comes courtesy of a 13-year-old who wants to be an engineer.)

Mary Shaw from Carnegie Mellon University makes an observation that seems obvious once you hear it: programming has traditionally taught students to write before they read. That’s backwards from every other discipline. Writers study literature before publishing. Musicians learn repertoire before composing. Mathematicians read proofs before constructing their own. But we’ve thrown students into writing code before they’ve meaningfully read any.

AI seems to be forcibly correcting this. When a model generates functional code in seconds, the ability to produce code stops being the differentiator. The ability to read, evaluate, and verify code you didn’t write becomes the core skill.

This creates what I’d call the “Two Loops” problem. Traditional agile assumes one feedback loop: build, demo, incorporate feedback, repeat. But with LLM-generated code, you now have two loops that can drift apart. Loop one: are we capturing stakeholder intent in our prompts? Loop two: did the model actually implement that intent? These can diverge in ways agile isn’t designed to catch.
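To make loop two concrete, here is a minimal sketch of how drift between intent and implementation might be caught. Everything in it is hypothetical: the stakeholder rule ("orders strictly over 100 get 10% off"), the `generated_discount` function standing in for model output, and the `find_drift` helper. The idea is that a human writes the intent down as input/expected pairs, independently of the generated code, and compares the two.

```python
from typing import Callable, List, Tuple

# Hypothetical stakeholder intent: "orders strictly over 100 get 10% off".

def generated_discount(total: float) -> float:
    """Stand-in for model-generated code. Note the boundary uses >= instead of >."""
    if total >= 100:
        return round(total * 0.9, 2)
    return total

# Intent written down by a human as (input, expected output) pairs.
INTENT_CASES: List[Tuple[float, float]] = [
    (50.0, 50.0),
    (100.0, 100.0),  # exactly 100 is not "over 100", so no discount
    (200.0, 180.0),
]

def find_drift(
    fn: Callable[[float], float],
    cases: List[Tuple[float, float]],
) -> List[Tuple[float, float, float]]:
    """Return (input, intended, actual) for every case where fn departs from intent."""
    return [(x, want, fn(x)) for x, want in cases if fn(x) != want]

drift = find_drift(generated_discount, INTENT_CASES)
for x, want, got in drift:
    print(f"drift at input {x}: intended {want}, got {got}")
```

The generated function looks plausible on a quick read and passes two of the three cases. Only the boundary case exposes the gap between what was asked for and what was built, which is the kind of subtle divergence a demo-driven feedback loop can easily miss.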

The critical skill shifts upstream. It’s no longer “can you implement this?” but “can you specify this precisely enough that a model won’t subtly misinterpret your intent?” The new bottleneck is prompt fidelity: does the natural-language prompt faithfully encode the stakeholder’s intent?

When humans write code, they can explain their reasoning. When models generate code in a fraction of the time, we currently lack an adequate way to validate the rationale behind it.

I suspect we’ll see specialized verification LLMs emerge: models assessing whether generated code aligns with prompt intent (assuming we solve the recursive challenge of trusting models to supervise models). Future DevOps pipelines might include stages we don’t have vocabulary for yet: prompt regression testing, semantic drift detection, intent-implementation alignment scoring.
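As a rough illustration of what "prompt regression testing" could look like, here is a small sketch. It is speculative by design: `model_v1` and `model_v2` are hard-coded stand-ins for two versions of a code-generating model (no real model API is called), and `CHECKS`, `passes`, and `regression_report` are names invented for this example. The same prompt is sent to each version, and the generated code is run against a fixed set of intent checks.

```python
from typing import Callable, Dict

PROMPT = "Write slugify(title) that lowercases the title and joins its words with hyphens."

# Hypothetical stand-ins for two model versions; each returns generated source code.
def model_v1(prompt: str) -> str:
    return "def slugify(title):\n    return '-'.join(title.lower().split())\n"

def model_v2(prompt: str) -> str:
    return "def slugify(title):\n    return title.lower().replace(' ', '-')\n"

# Intent checks that stay fixed while models and prompts change.
CHECKS: Dict[str, str] = {
    "Hello World": "hello-world",
    "  Two  Loops ": "two-loops",  # extra whitespace should not leak into the slug
}

def passes(source: str) -> bool:
    """Run generated source and check it against every intent case."""
    namespace: dict = {}
    # exec is acceptable for a trusted sketch; real generated code would need sandboxing.
    exec(source, namespace)
    fn = namespace["slugify"]
    return all(fn(given) == want for given, want in CHECKS.items())

def regression_report(models: Dict[str, Callable[[str], str]]) -> Dict[str, bool]:
    """Map each model version to whether its output still satisfies the intent checks."""
    return {name: passes(generate(PROMPT)) for name, generate in models.items()}

report = regression_report({"v1": model_v1, "v2": model_v2})
print(report)
```

Here the second stand-in handles the simple case but mishandles repeated and surrounding spaces, so the report flags it as a regression even though the prompt never changed. A real pipeline stage would presumably need far richer checks than exact string matches, but the shape is the same: fixed intent, changing generator, automated comparison.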

The engineers who thrive won’t be the fastest coders. They’ll be the ones who can reliably detect when machine-generated code has subtly drifted from intent.

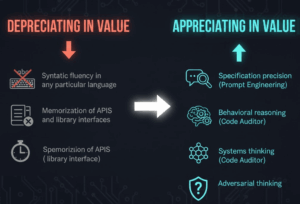

As a result, some skills will depreciate in value while others appreciate.

Depreciating in Value:

Appreciating in Value:

I am not sure if this is basically a return to Systems Thinking, but for now, word to the wise: stay more curious about the whys than the hows!