This One Prompting Trick Transformed How I Use #GenAI and LLMs. And I am not just talking about ChatGPT or #Claude.ai: this works for image generation, workflow-diagramming AI, and pretty much any prompt-driven AI tool I have used.

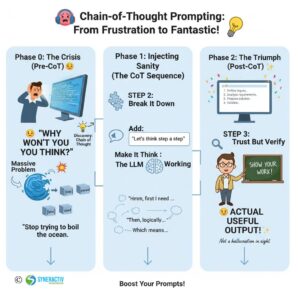

Frankly, LLMs can be frustrating when it comes to tasks involving even moderately complex reasoning. I had to minutely audit LLM output ranging from objective applications (complex and iterative code flows, contract redlining, workflow mapping) to subjective ones (content development and similar work with some artistic liberty). The outputs were inconsistent and the logic was opaque. I couldn’t rely on the results for critical work without giving back a large chunk of the productivity gains to time-consuming output audits. Then I discovered Chain-of-Thought (CoT) prompting, and everything changed.

Here’s what blew my mind: while LLMs aren’t actually built for traditional reasoning, this single prompting approach unlocks powerful pseudo-reasoning capabilities that deliver real business value.

Where I’ve seen CoT work brilliantly when normal prompts delivered subpar outcomes:

- Analyzing legal contracts and debugging complex code (syllogistic reasoning)

- Crafting RFP responses and developing strategic content (inductive, case-based reasoning)

The results? Night and day difference.

How I Implement CoT Prompting:

The approach is simple—I guide the LLM to “show its work” by breaking problems into logical steps.

My Three-Step Process:

1. Decompose the Problem. I break down complex challenges into bite-sized subtasks. Sometimes I do this myself, sometimes I let the AI help me structure the breakdown. Either way works beautifully.

2. Prompt for Stepwise Reasoning. I use two approaches depending on the situation:

Zero-shot CoT: I simply add “let’s think step by step” or “explain your reasoning” to my prompts, with no examples needed.

Few-shot CoT: When I need more guidance, I provide a sample reasoning chain so the model learns the exact logical pattern I want.

3. Review the Reasoning Chain. This is where the magic happens. The AI shows me every intermediate step in its thought process before landing on an answer. I can spot errors, verify logic, and trust the output because I can see exactly how it got there.
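To make the three steps concrete, here is a rough Python sketch of how the prompts could be assembled and how a returned reasoning chain could be split up for review. It is only an illustration, not tied to any particular tool: the function names (`zero_shot_cot`, `few_shot_cot`, `split_steps`), the "Q / Reasoning / Answer" layout, and the assumption that the model numbers its steps are all my own placeholders, and the code does not call any model.

```python
import re

# Cue phrase taken from the zero-shot approach described above.
ZERO_SHOT_CUE = "Let's think step by step."


def zero_shot_cot(question: str) -> str:
    """Zero-shot CoT: append a step-by-step cue, with no worked examples."""
    return f"{question.strip()}\n\n{ZERO_SHOT_CUE}"


def few_shot_cot(question: str, examples: list[tuple[str, str, str]]) -> str:
    """Few-shot CoT: prefix the question with (question, reasoning, answer) examples.

    The "Q / Reasoning / Answer" layout is a hypothetical format for this sketch.
    """
    blocks = [
        f"Q: {q}\nReasoning: {reasoning}\nAnswer: {answer}"
        for q, reasoning, answer in examples
    ]
    # End on an open "Reasoning:" so the model continues the pattern.
    blocks.append(f"Q: {question.strip()}\nReasoning:")
    return "\n\n".join(blocks)


def split_steps(response: str) -> list[str]:
    """Split a reasoning chain into steps so each one can be audited separately.

    Assumes the model numbered its steps "1.", "2.", ... at the start of lines;
    any text before the first number is returned as its own leading item.
    """
    parts = re.split(r"(?m)^[ \t]*\d+[.)]\s+", response)
    return [p.strip() for p in parts if p.strip()]


if __name__ == "__main__":
    print(zero_shot_cot("Does clause 4 conflict with clause 9?"))
    # Hand-written stand-in for a model reply, used only to show the review step.
    sample_reply = "1. Clause 4 sets a 30-day notice.\n2. Clause 9 sets 60 days.\n3. They conflict."
    for number, step in enumerate(split_steps(sample_reply), start=1):
        print(f"Step {number}: {step}")
```

Splitting the chain into separate steps is what makes the review in step 3 practical: each step can be checked on its own instead of rereading one long answer.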

This can be transformative for mission-critical applications with complex reasoning requirements and minimal margin for error, such as legal analysis, strategic planning, and technical problem-solving. Elevate your prompting game with CoT!